Perceptron

Introduction

The single-layer perceptron is one of the simplest neural network architectures. It’s a linear classifier used for binary classification tasks. In this article, we’ll break down the single-layer perceptron algorithm and implement it in Python. The single-layer perceptron consists of only one layer of artificial neurons, which is also called the output layer. It takes a set of input features, applies weights to them, sums up the weighted inputs, and then applies an activation function to produce an output.

Implementation

First, a Python class is created to hold the functions that initialize the hyperparameters needed to run the perceptron, such as the learning rate and the number of iterations, along with the plot object used for visualization.
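As a rough illustration of such a class, here is a minimal constructor sketch. The class name `Perceptron`, the parameter names `learning_rate`, `n_iters` and `plotter`, and the default values are all assumptions made for this example, not the original code.

```python
class Perceptron:
    """Minimal constructor sketch; all names and defaults are illustrative."""

    def __init__(self, learning_rate=0.01, n_iters=1000, plotter=None):
        # Step size used when the weights and bias are updated.
        self.learning_rate = learning_rate
        # Number of passes over the training data.
        self.n_iters = n_iters
        # Optional helper object used to draw the decision boundary.
        self.plotter = plotter
        # Parameters are created later, once the number of features is known.
        self.weights = None
        self.bias = None


# Example: a perceptron with a larger step size and fewer iterations.
model = Perceptron(learning_rate=0.1, n_iters=50)
```

Keeping the hyperparameters on the instance means the training and prediction methods can read them without extra arguments.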

The fit(self, X, y) method takes two parameters, X and y, where X holds the input feature vectors of the data and y is the target (output) vector. Under the hood, the activation_fuction(X) method implements a linear model, defined as:

$$z = W \cdot X + b$$
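To make the linear model concrete, here is a small worked example with NumPy. The numbers are made up for illustration, and the convention of storing one sample per row of `X` is an assumption.

```python
import numpy as np

# Two samples with two features each (illustrative values).
X = np.array([[1.0, 2.0],
              [3.0, 4.0]])
W = np.array([0.5, -0.25])  # one weight per feature
b = 0.1                     # bias

# Linear model: weighted sum of the features plus the bias, per sample.
z = X @ W + b
print(z)  # approximately [0.1, 0.6]
```

The first entry is 1(0.5) + 2(-0.25) + 0.1 = 0.1 and the second is 3(0.5) + 4(-0.25) + 0.1 = 0.6.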

In the linear model, W and b are the weights and bias, respectively, both initialized randomly. A unit step function is used as the activation for the linear model: it thresholds the linear output into discrete class labels, here -1 and 1. Gradient descent is then used to find the optimal model parameters by iterating over the data.
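The training procedure described above can be sketched roughly as follows. This is not the original implementation: it is written as standalone functions rather than methods so that it runs on its own, the names `unit_step`, `fit_perceptron` and `predict` are hypothetical, labels are assumed to be -1 and 1, and the update shown is the classic perceptron learning rule applied one sample at a time.

```python
import numpy as np


def unit_step(z):
    # Threshold the linear output: 1 where z >= 0, otherwise -1.
    return np.where(z >= 0, 1, -1)


def fit_perceptron(X, y, learning_rate=0.1, n_iters=100, seed=0):
    # Assumes y contains only the labels -1 and 1.
    rng = np.random.default_rng(seed)
    # Small random starting values for the weights and the bias.
    W = rng.normal(scale=0.01, size=X.shape[1])
    b = rng.normal(scale=0.01)

    for _ in range(n_iters):
        mistakes = 0
        for x_i, y_i in zip(X, y):
            y_hat = unit_step(x_i @ W + b)
            # The update is zero when the prediction is already correct.
            update = learning_rate * (y_i - y_hat)
            if update != 0:
                W += update * x_i
                b += update
                mistakes += 1
        if mistakes == 0:
            # Every sample is classified correctly, so stop early.
            break
    return W, b


def predict(X, W, b):
    return unit_step(X @ W + b)


# Toy, linearly separable data (made up for this example).
X_train = np.array([[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -3.0]])
y_train = np.array([1, 1, -1, -1])

W, b = fit_perceptron(X_train, y_train)
print(predict(X_train, W, b))
```

Stopping as soon as a full pass produces no mistakes is a design choice of this sketch; a fixed number of iterations would work as well.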

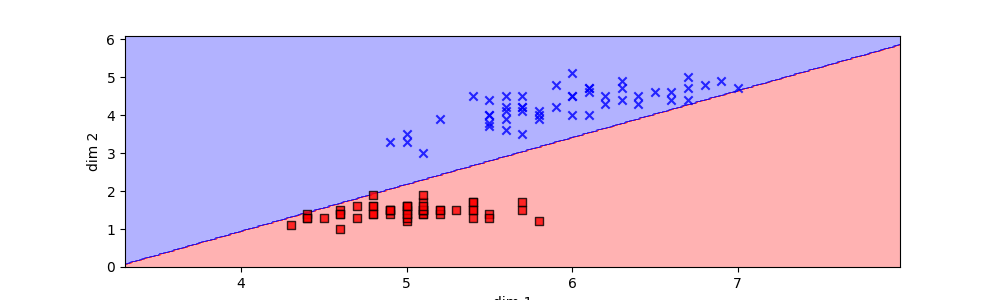

To visualize the decision boundary, a separate class is created. Its plot_decesion_boundary() method takes the classifier as a parameter and predicts over a range of values in two dimensions; a filled contour plot is then applied to visualize the boundary predicted by the model.
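One possible way to draw such a plot with Matplotlib is sketched below. It is a plain function rather than the class described above, and the name `plot_decision_boundary`, its parameters, and the toy classifier are assumptions made for this example. The sketch expects exactly two input features.

```python
import numpy as np
import matplotlib.pyplot as plt


def plot_decision_boundary(predict_fn, X, y, step=0.05, ax=None):
    """Shade the regions predicted by predict_fn over a 2D grid."""
    if ax is None:
        _, ax = plt.subplots()

    # Grid that covers the data with a margin of one unit on each side.
    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    xx, yy = np.meshgrid(np.arange(x_min, x_max, step),
                         np.arange(y_min, y_max, step))

    # Predict a label for every grid point, then restore the grid shape.
    grid = np.c_[xx.ravel(), yy.ravel()]
    Z = np.asarray(predict_fn(grid)).reshape(xx.shape)

    # Filled contours show the predicted regions; points show the data.
    ax.contourf(xx, yy, Z, alpha=0.3)
    ax.scatter(X[:, 0], X[:, 1], c=y, edgecolors="k")
    ax.set_xlabel("feature 1")
    ax.set_ylabel("feature 2")
    return ax


def toy_predict(points):
    # Stand-in classifier: label 1 where the two features sum to >= 0.
    return np.where(points[:, 0] + points[:, 1] >= 0, 1, -1)


X_demo = np.array([[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -3.0]])
y_demo = np.array([1, 1, -1, -1])
ax = plot_decision_boundary(toy_predict, X_demo, y_demo)
```

Calling `plt.show()` afterwards displays the figure. Passing a prediction function instead of a classifier object keeps the sketch independent of any particular class.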

Conclusion

The implementation showcased here provides a practical demonstration of how the perceptron learns to classify data by iteratively adjusting its weights and bias through gradient descent. With each iteration, the perceptron edges closer to a decision boundary that separates the classes in the input data, provided the classes are linearly separable.

Moreover, the visualization of the decision boundary, achieved through the contour plot, offers invaluable insight into how the perceptron learns and adapts. This dynamic visualization not only aids in understanding the training process but also demonstrates the convergence of the perceptron as it refines its parameters.